If your data pipeline serves up bad data to a business stakeholder, one of three things will happen:

They realize it’s wrong right away, and lose confidence in the data and data team.

They don’t realize it’s wrong, take action based on it, find out it was wrong later… and then lose confidence in the data and data team.

They don’t realize it’s wrong, take action, and no one ever realizes. Maybe the outcome is the same as if the data had been correct, maybe not. But no one knows why what happened, happened—their confidence in their decision is misplaced.

With a few tweaks, all of the above apply to your customers, too. And when your customers lose confidence in you, they start being your competitors’ customers instead.

Your data pipeline might be delivering the data, but testing your pipeline’s functionality can only do so much, because many data quality issues have nothing to do with your pipeline: they originate at the source of your data, before it ever reaches you.

But regardless of their source, data quality troubles are inevitable, and you need to handle them before stakeholders and customers ever see them.

The best way to do that is with data validation.

Data validation in the pipeline gives data teams peace of mind that no matter where errors originate, problematic data won’t reach end users.

This article belongs to a series exploring how you can use the Great Expectations data platform to integrate data validation into your pipelines. We’ll cover what data to validate, where validation should go, and the features of GX that are most useful at every step.

In this article, we’re looking at why data validation belongs throughout your pipeline, where it should go, and how to decide what to validate.

Why validate throughout the data pipeline

We’ve already established that you need to resolve data quality issues before a business stakeholder encounters them.

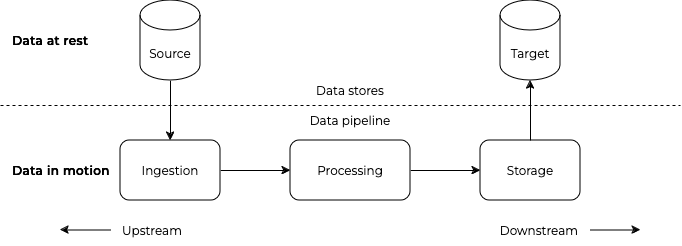

Stakeholders are most likely to encounter data that’s at rest: that is, data that has reached the end of its particular journey through your data pipeline. This is the Target data store in the illustration above.

If we want to prevent stakeholders from encountering data quality issues, we need to resolve them before the data comes to rest, i.e., while the data is still moving through the pipeline.

Even if we take stakeholders out of the equation, though, logic still points to testing in the pipeline.

By definition, data at rest in a target store isn’t changing anymore. Any data quality issues are extremely likely to have originated upstream—with a few exceptions, like misalignment on definitions.

When you catch an issue after the data has come to rest, you need to backtrace its entire journey and reverse engineer all its transformations until you’ve identified the issue’s source. Then you have to trace forward all of the places that the data went, and implement a backfill process to fix it or do manual repairs. That’s a lot of time and effort.

When you catch an issue while data is in motion, you’re closer to the issue’s source. You might still need to do some backtracking and reverse engineering, but you know the scope from the outset based on the last set of checks that it passed. You can also stop the problematic data from moving downstream until you’ve fixed it.
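
To make "stop the problematic data from moving downstream" concrete, here is a minimal plain-Python sketch with no GX APIs involved. Each stage is paired with a check, the run halts at the first failed check, and the return value tells you which stage to investigate. The `run_pipeline` helper, the stage names, and the record shape are all hypothetical.

```python
from typing import Callable, List, Optional, Tuple

Rows = List[dict]
Step = Callable[[Rows], Rows]
Check = Callable[[Rows], bool]


def run_pipeline(
    rows: Rows, stages: List[Tuple[str, Step, Check]]
) -> Tuple[Rows, Optional[str]]:
    """Hypothetical helper: apply each stage, then its check.

    Returns the data from the last checkpoint that passed, plus the name of
    the stage whose check failed (None if every check passed).
    """
    last_good = rows
    for name, step, check in stages:
        candidate = step(last_good)
        if not check(candidate):
            # Halt: nothing downstream sees the failing batch, and the
            # search for the cause starts at this one stage.
            return last_good, name
        last_good = candidate
    return last_good, None


# Hypothetical two-stage pipeline: ingest as-is, then convert to cents.
stages = [
    ("ingest", lambda rs: rs, lambda rs: all("amount" in r for r in rs)),
    (
        "to_cents",
        lambda rs: [{**r, "amount": r["amount"] * 100} for r in rs],
        lambda rs: all(r["amount"] >= 0 for r in rs),
    ),
]

output, failed_at = run_pipeline([{"amount": 5}, {"amount": -1}], stages)
print(failed_at)  # to_cents
```

Because the run stops at `to_cents`, you know the issue was introduced after ingestion passed its check, which is exactly the scoping benefit described above.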

You’re going to have to spend some resources on resolving data quality issues no matter what. But it’s clear that you’ll have to spend fewer of them when you’re doing data quality monitoring throughout your pipeline, on data in motion.

Often, the “throughout the pipeline” approach to data validation is called shift-left testing, or just shift left. The language is borrowed from software testing, but the concept is the same: the resource burden of fixing errors is reduced when you test early and often.

This is by no means a novel concept—consider “an ounce of prevention is worth a pound of cure,” written by Benjamin Franklin in 1735.

Even applied specifically to data quality, the idea isn’t new: the 1–10–100 rule for quantifying the cost of bad data dates back to 1992. If a data quality problem cost $1 to prevent, $10 to fix, and $100 if you did nothing about it back then, you can imagine how steep that cost curve might look now.
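
The rule’s arithmetic is easy to sketch. Assuming a hypothetical batch of 100 data quality issues and the rule’s $1/$10/$100 unit costs, the snippet compares a pipeline that catches most issues late with one that catches most of them early. The split of issues between outcomes is invented purely for illustration.

```python
# Nominal unit costs from the 1-10-100 rule.
COST_PREVENT = 1    # caught before the bad data is loaded
COST_CORRECT = 10   # caught and fixed after it has landed
COST_FAILURE = 100  # never caught; the damage reaches users


def total_cost(prevented: int, corrected: int, unaddressed: int) -> int:
    """Total cost of a set of issues, split by how each one was handled."""
    return (
        prevented * COST_PREVENT
        + corrected * COST_CORRECT
        + unaddressed * COST_FAILURE
    )


# Hypothetical split of the same 100 issues under two strategies.
late = total_cost(prevented=10, corrected=50, unaddressed=40)
early = total_cost(prevented=70, corrected=20, unaddressed=10)
print(late, early)  # 4510 1270
```

In this made-up scenario, catching issues earlier drops the total from 4,510 to 1,270 cost units without reducing the number of issues at all.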

Where to validate data in your pipeline

Data quality issues can be introduced anywhere in a pipeline: at data input, during ingestion, and post-ingestion during transformations and other processing. Identifying hotspots for data quality issue origination lets you deploy your data validation strategically, so that you can get insight into the most critical spots immediately.

Following the shift-left principle, the first hotspot to check is data ingestion.

Unexpected schema changes are one of the most common sources of data quality issues, which is one reason the idea of the data contract gained traction so quickly. Schema validation alone will let you detect a significant source of quality errors, even before you consider other types of data quality checks.
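
A schema check at ingestion can be as simple as comparing each incoming record against the columns and types you expect. The sketch below is plain Python rather than GX, and the `EXPECTED_SCHEMA` columns are hypothetical. It reports missing columns, unexpected new columns, and type drift.

```python
from typing import Dict, List

# Hypothetical schema agreed with the upstream producer.
EXPECTED_SCHEMA: Dict[str, type] = {
    "order_id": int,
    "customer_id": int,
    "amount": float,
    "currency": str,
}


def validate_schema(record: dict, expected: Dict[str, type]) -> List[str]:
    """Return a list of schema problems for one record (empty if none)."""
    problems = []
    for col in sorted(expected.keys() - record.keys()):
        problems.append(f"missing column: {col}")
    for col in sorted(record.keys() - expected.keys()):
        problems.append(f"unexpected column: {col}")
    for col, typ in expected.items():
        # Strict match: an int in a float column, or a bool in an int
        # column, is treated as drift rather than silently accepted.
        if col in record and type(record[col]) is not typ:
            got = type(record[col]).__name__
            problems.append(f"{col}: expected {typ.__name__}, got {got}")
    return problems


# Upstream renamed `amount` and started sending customer IDs as strings.
incoming = {"order_id": 1, "customer_id": "C-9", "amount_usd": 12.5, "currency": "USD"}
for problem in validate_schema(incoming, EXPECTED_SCHEMA):
    print(problem)
```

A single renamed column shows up twice (as missing and as unexpected), which is usually the quickest hint that the producer changed its schema rather than dropping data.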

The next series of hotspots is after each transformation step.

This approach is similar to “black box testing” in software engineering: with a post-transformation validation, you confirm that the transformation’s output conforms to what downstream consumers expect, without inspecting how the transformation works internally.

Typical post-transformation checks include schema validation, the distribution of numerical values, and relationships between columns.
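
Those three kinds of post-transformation check might look like this in plain Python. The column names, the expected mean range of 10 to 500, and the ship-after-order rule are hypothetical stand-ins for whatever your downstream consumers actually expect.

```python
from datetime import date
from statistics import mean
from typing import Dict, List

# Hypothetical output contract for the transformation.
EXPECTED_COLUMNS = {"order_id", "order_date", "ship_date", "amount"}


def check_post_transform(rows: List[dict]) -> Dict[str, bool]:
    """Run illustrative post-transformation checks on one output batch."""
    amounts = [r["amount"] for r in rows if "amount" in r]
    return {
        # Schema: every row has exactly the expected columns.
        "schema": all(set(r) == EXPECTED_COLUMNS for r in rows),
        # Distribution: mean amount stays inside an assumed expected band.
        "amount_mean_in_range": bool(amounts) and 10 <= mean(amounts) <= 500,
        # Relationship between columns: nothing ships before it was ordered.
        "ship_after_order": all(
            r["ship_date"] >= r["order_date"]
            for r in rows
            if "ship_date" in r and "order_date" in r
        ),
    }


batch = [
    {"order_id": 1, "order_date": date(2024, 5, 1), "ship_date": date(2024, 5, 3), "amount": 40.0},
    {"order_id": 2, "order_date": date(2024, 5, 2), "ship_date": date(2024, 4, 30), "amount": 60.0},
]
print(check_post_transform(batch))
# {'schema': True, 'amount_mean_in_range': True, 'ship_after_order': False}
```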

It can also be useful to add data validation before transformation steps. First, this ensures that your transformation receives the data you expect it to get.

In combination with post-transformation checks, pre-transformation data validation allows you to monitor the behavior of the transformation itself. Rather than checking only that the transformation produced reasonable data, you can confirm that it produced the correct data for the exact set of given inputs.
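
Pairing a pre-check with a post-check tied to the same inputs lets you test the transformation itself, not just the plausibility of its output. In this hypothetical sketch, the transformation sums order amounts per customer, and the post-check reconciles the output against the exact input batch. A transformation that drops a customer therefore fails, even though its output still looks reasonable on its own.

```python
from collections import defaultdict
from typing import Dict, List


def totals_by_customer(orders: List[dict]) -> Dict[str, int]:
    """Hypothetical transformation under test: total amount (cents) per customer."""
    totals: Dict[str, int] = defaultdict(int)
    for o in orders:
        totals[o["customer_id"]] += o["amount_cents"]
    return dict(totals)


def pre_check(orders: List[dict]) -> bool:
    """Inputs match what the transformation assumes: a customer and an int amount."""
    return all(
        bool(o.get("customer_id")) and isinstance(o.get("amount_cents"), int)
        for o in orders
    )


def post_check(orders: List[dict], totals: Dict[str, int]) -> bool:
    """Output reconciles with these exact inputs."""
    # No money created or lost in aggregation.
    same_sum = sum(totals.values()) == sum(o["amount_cents"] for o in orders)
    # Exactly one output row per customer present in the input.
    same_keys = set(totals) == {o["customer_id"] for o in orders}
    return same_sum and same_keys


orders = [
    {"customer_id": "a", "amount_cents": 500},
    {"customer_id": "b", "amount_cents": 250},
    {"customer_id": "a", "amount_cents": 100},
]
assert pre_check(orders)
totals = totals_by_customer(orders)
print(totals, post_check(orders, totals))  # {'a': 600, 'b': 250} True

# A buggy transformation that silently drops customer "b" still produces
# plausible-looking output, but fails reconciliation against its inputs.
print(post_check(orders, {"a": 600}))  # False
```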

Many organizations apply the medallion architecture, as popularized by Databricks. Setting data quality checks before data passes into the gold (or consumption) layer allows you to verify that the data conforms to the needs and business rule expectations of that specific layer’s consumers.
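
One way to express layer-specific expectations is a registry of checks per medallion layer, with the strictest business rules reserved for gold. This is a plain-Python sketch; the layer contents, the non-negative-amount rule, and the region list are hypothetical examples, not Databricks or GX conventions.

```python
from typing import Callable, Dict, List, Tuple

RowCheck = Callable[[dict], bool]


def is_number(v) -> bool:
    return isinstance(v, (int, float)) and not isinstance(v, bool)


# Hypothetical checks per layer: loose at bronze, business rules at gold.
LAYER_CHECKS: Dict[str, List[Tuple[str, RowCheck]]] = {
    "bronze": [("has_id", lambda r: "order_id" in r)],
    "silver": [
        ("has_id", lambda r: "order_id" in r),
        ("amount_numeric", lambda r: is_number(r.get("amount"))),
    ],
    "gold": [
        ("has_id", lambda r: "order_id" in r),
        ("amount_numeric", lambda r: is_number(r.get("amount"))),
        # Assumed consumer rule: reported revenue is never negative.
        ("amount_non_negative", lambda r: is_number(r.get("amount")) and r["amount"] >= 0),
        # Assumed consumer rule: every row maps to a known sales region.
        ("known_region", lambda r: r.get("region") in {"EMEA", "AMER", "APAC"}),
    ],
}


def failed_checks(rows: List[dict], layer: str) -> List[str]:
    """Names of the layer's checks that fail for at least one row."""
    return [
        name for name, check in LAYER_CHECKS[layer]
        if not all(check(r) for r in rows)
    ]


refund = [{"order_id": 7, "amount": -25.0, "region": "EMEA"}]
print(failed_checks(refund, "silver"))  # []
print(failed_checks(refund, "gold"))    # ['amount_non_negative']
```

The same refund row is acceptable in silver but is held back from gold, because only gold consumers carry the non-negative-revenue expectation in this example.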

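There are multiple architectural patterns for running data validation in the pipeline, including the popular Write-Audit-Publish (WAP) approach, in which new data is written to a staging area, audited there, and published to production tables only if it passes.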
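
Stripped to its core, WAP is three steps: write new data somewhere consumers don’t read from, audit it there, and publish it only if the audit passes. The sketch below uses a plain Python dict as a stand-in for a warehouse; the `__staging` naming, the append-on-publish behavior, and the audit function are assumptions for illustration. Real implementations typically rely on staging tables or schemas, or on branching features in some table formats.

```python
from typing import Callable, Dict, List

Rows = List[dict]


def write_audit_publish(
    tables: Dict[str, Rows],
    target: str,
    new_rows: Rows,
    audit: Callable[[Rows], bool],
) -> bool:
    """Write to staging, audit there, and publish to `target` only on success."""
    staging = f"{target}__staging"  # hypothetical naming convention
    # Write: land the batch where consumers don't read from.
    tables[staging] = list(new_rows)
    # Audit: validate the staged batch in isolation.
    if not audit(tables[staging]):
        # Target is untouched; the failed batch stays in staging for debugging.
        return False
    # Publish: append the audited batch to the consumer-facing table.
    tables[target] = tables.get(target, []) + tables.pop(staging)
    return True


def has_ids(rows: Rows) -> bool:
    return all("id" in r for r in rows)


warehouse: Dict[str, Rows] = {"orders": [{"id": 1}]}
print(write_audit_publish(warehouse, "orders", [{"id": 2}], has_ids))    # True
print(write_audit_publish(warehouse, "orders", [{"oops": 3}], has_ids))  # False
print(warehouse)
# {'orders': [{'id': 1}, {'id': 2}], 'orders__staging': [{'oops': 3}]}
```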

What to validate in the data pipeline

Types of validations

Data quality is a multifaceted problem. To communicate meaningfully about a dataset’s current state and health, you need a framework against which state and health can be defined.

This assessment framework is typically defined using characteristics of the data, also known as data quality dimensions.

Common data quality dimensions used for assessment include:

Accuracy. How well data represents entities or events from reality.

Completeness. All required data is present with no gaps.

Consistency. Uniformity of data representation and measurements within a dataset or across different datasets.

Timeliness. Data is present in the expected place, in the proper format, at the right time.

Uniqueness. Each data point within a dataset represents a different entity or event.

Validity. Data conforms to the expected format, type, or range of acceptable values.

Different frameworks may use slightly different sets of dimensions or define them in slightly different ways, but these six (accuracy, completeness, consistency, timeliness, uniqueness, and validity) are a solid, widely recognized foundation to use as you explore options for data validation.
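
Several of these dimensions translate directly into simple checks. The sketch below is plain Python with hypothetical column names and thresholds. It covers completeness, uniqueness, validity, timeliness (as freshness), and one possible reading of consistency (agreement across two datasets). Accuracy is left out because it usually needs a trusted reference for what is true in reality, which a dataset can’t supply on its own.

```python
import re
from datetime import datetime, timedelta, timezone
from typing import List


def completeness(rows: List[dict], col: str) -> float:
    """Share of rows where `col` is present and not None."""
    return sum(r.get(col) is not None for r in rows) / len(rows)


def uniqueness(rows: List[dict], col: str) -> bool:
    """True if no two rows share a value of `col`."""
    values = [r[col] for r in rows]
    return len(values) == len(set(values))


def validity(rows: List[dict], col: str, pattern: str) -> bool:
    """True if every non-null value of `col` fully matches `pattern`."""
    return all(re.fullmatch(pattern, r[col]) for r in rows if r.get(col) is not None)


def timeliness(rows: List[dict], col: str, max_age: timedelta, now: datetime) -> bool:
    """True if the newest timestamp in `col` is at most `max_age` old."""
    return now - max(r[col] for r in rows) <= max_age


def consistency(rows_a: List[dict], rows_b: List[dict], key: str, col: str) -> bool:
    """True if rows sharing `key` agree on `col` across two datasets."""
    lookup = {r[key]: r[col] for r in rows_b}
    return all(lookup[r[key]] == r[col] for r in rows_a if r[key] in lookup)


now = datetime(2024, 6, 1, 12, 0, tzinfo=timezone.utc)
customers = [
    {"id": 1, "email": "a@example.com", "updated": now - timedelta(hours=2)},
    {"id": 2, "email": None, "updated": now - timedelta(days=3)},
    {"id": 2, "email": "not-an-email", "updated": now - timedelta(days=1)},
]
billing = [{"id": 1, "email": "A@example.com"}]

EMAIL = r"[^@\s]+@[^@\s]+\.[^@\s]+"  # deliberately loose, for illustration
print(completeness(customers, "email"))                           # 0.6666666666666666
print(uniqueness(customers, "id"))                                # False
print(validity(customers, "email", EMAIL))                        # False
print(timeliness(customers, "updated", timedelta(hours=6), now))  # True
print(consistency(customers, billing, "id", "email"))             # False
```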

How to start

To implement effective data quality monitoring, you need to understand the dataset and its intended uses, not just blindly implement statistical monitoring.

The first step in understanding a new dataset is to do data profiling.

A simple profile can give you key information about the data’s state, and allows you to begin assessing whether existing validations and assumptions about the data’s state are effective or accurate.
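
A first-pass profile doesn’t need much machinery. This plain-Python sketch (not GX’s own profiling features) reports, per column, the row count, nulls, distinct values, and min/max for numeric values. The sample rows are hypothetical, and the sketch assumes column values are hashable scalars.

```python
from typing import Dict, List


def profile(rows: List[dict]) -> Dict[str, dict]:
    """Per-column summary: rows, nulls, distinct non-null values, numeric min/max."""
    columns = sorted({col for r in rows for col in r})
    report = {}
    for col in columns:
        # A key missing from a row counts as null, same as an explicit None.
        values = [r.get(col) for r in rows]
        non_null = [v for v in values if v is not None]
        stats = {
            "rows": len(values),
            "nulls": len(values) - len(non_null),
            "distinct": len(set(non_null)),
        }
        numeric = [
            v for v in non_null
            if isinstance(v, (int, float)) and not isinstance(v, bool)
        ]
        if numeric:
            stats["min"], stats["max"] = min(numeric), max(numeric)
        report[col] = stats
    return report


sample = [
    {"id": 1, "country": "DE", "amount": 10.0},
    {"id": 2, "country": None, "amount": 250.0},
    {"id": 3, "country": "DE"},
]
for col, stats in profile(sample).items():
    print(col, stats)
```

Even this much can challenge assumptions: a column documented as required that shows nulls, or a supposedly unique ID with fewer distinct values than rows, is worth a conversation before you write any validations.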

Once profiling has given you an initial idea of the data’s state, you need to prioritize what to test. In an ideal world, you would be able to test every dimension of the data at every step; but if this were an ideal world, there wouldn’t be data quality issues at all.

Here are some factors to consider as you prioritize your data quality work:

Downstream usage. What data does your company use for its most critical decision-making? What data has a lot of external visibility? What data does day-to-day operations depend on?

Business rules. Each column of your data exists in a business context, which means data that’s acceptable from a technical standpoint can still be invalid operationally. Columns with complex business requirements could be high priority for data quality testing, especially if regulatory concerns are involved.

Stakeholders. The more stakeholders a dataset has, the more opportunities there are for data quality issues that stem from different understandings of the data. Involving stakeholders to learn what expectations each has for the data (and, crucially, why they have those expectations) is a process best started sooner rather than later.
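
To make the business-rules factor concrete: a value can pass every type and range check and still be operationally wrong. In this hypothetical example, any discount from 0 to 100 percent is technically valid, but an assumed business rule says discounts above 30 percent need a named approver.

```python
from typing import List

MAX_UNAPPROVED_DISCOUNT = 30  # hypothetical business threshold, in percent


def business_rule_violations(orders: List[dict]) -> List[int]:
    """IDs of orders that are technically valid but break the approval rule."""
    violations = []
    for o in orders:
        pct = o.get("discount_pct")
        technically_valid = (
            isinstance(pct, (int, float)) and not isinstance(pct, bool) and 0 <= pct <= 100
        )
        if not technically_valid:
            continue  # a validity problem; catch it with a separate check
        if pct > MAX_UNAPPROVED_DISCOUNT and not o.get("approved_by"):
            violations.append(o["order_id"])
    return violations


orders = [
    {"order_id": 1, "discount_pct": 10},
    {"order_id": 2, "discount_pct": 45},
    {"order_id": 3, "discount_pct": 45, "approved_by": "j.doe"},
]
print(business_rule_violations(orders))  # [2]
```

Keeping the technical validity check and the business rule separate makes it clear which stakeholder owns each failure.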

For more considerations about setting priorities ahead of embarking on a data quality process, see our earlier blog post about where to start with data quality.

Summary

In this article, we explored:

Why data validation is critical

Why it should take place throughout your data pipeline

What aspects of data to validate

How to get started prioritizing your data validation work.

In other entries of this series, we’ll explore how the GX platform can be used to implement data validation according to the principles we established here, including key features from GX Core and GX Cloud.

Thanks to Bruno Gonzalez for his contributions to this article.